The Problem

Your labs pump out data like crazy ...

But your scientists? Still stuck copy/pasting between spreadsheets.

Manually moving data between instruments and systems, chasing down files, reformatting everything just to start analyzing — it's a huge time suck.

When your data isn't standardized or centralized, you're paying smart people to do busywork, innovation slows to a crawl, and AI or cross-study insights stay out of reach.

Sound familiar?

Be honest:

How many hours/week are your scientists just wrangling data? And how often do experiments stall because no one can find (or trust) the results?

This isn't a resource issue. It's a data flow issue.

Our Approach

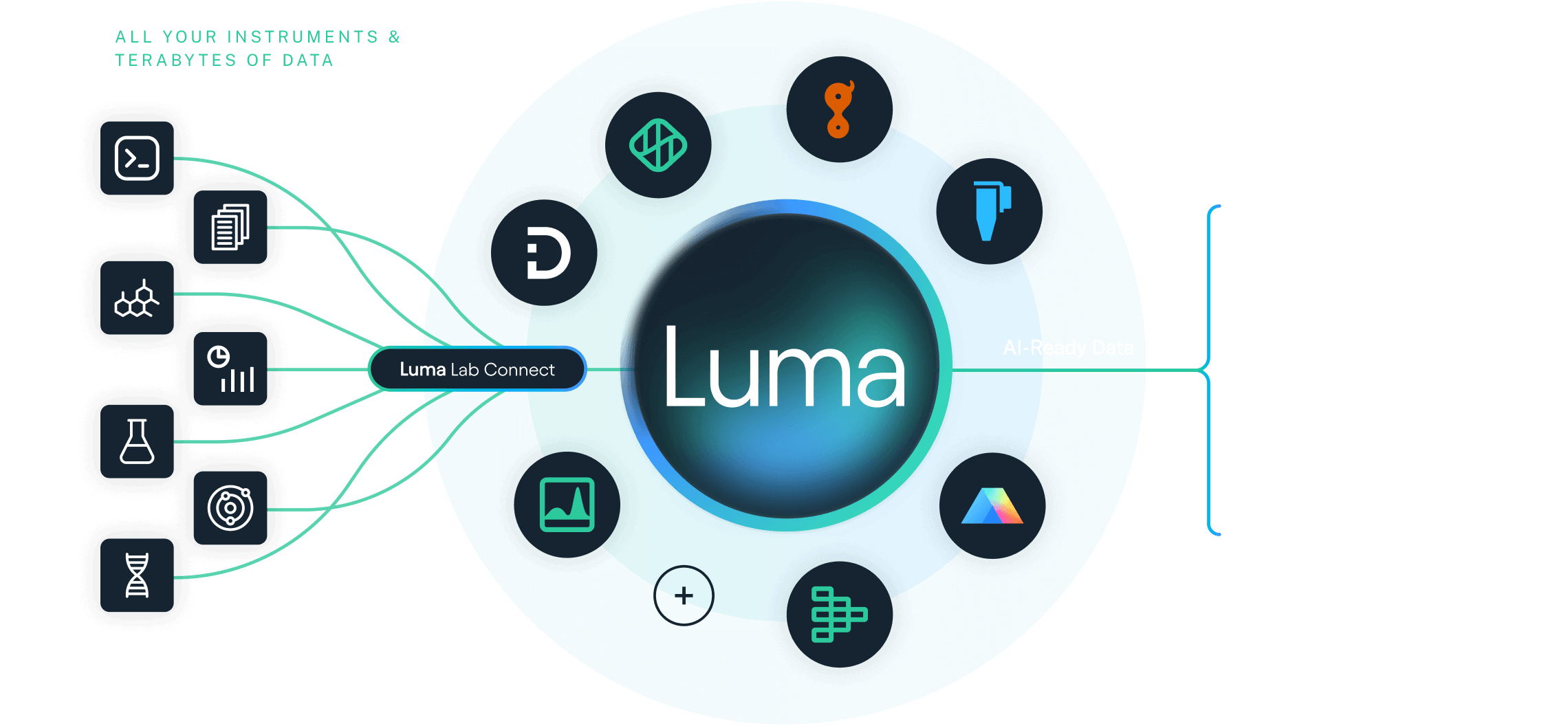

Luma Lab Connect automates the grunt work.

It connects your instruments and systems, structures all your data, and pipes it where it needs to go — clean, usable, and ready for science.

No custom code. No IT tickets. No data scavenger hunts.

Just real-time, standardized data flow across your labs.

Why This Matters

Your scientists get to spend less time typing and more time discovering.

Your org moves faster, with better insights and a clear path to digital transformation (without a 2-year IT project).

Two quick questions

You're probably thinking:

"We already have ELNs and LIMS ... do we really need another platform?"

But ask yourself:

1. Can your scientists access clean, structured data from every instrument — right now, without manual effort?

2. If AI is the future of R&D ... is your lab data even ready for it?

If those gave you pause, it's probably worth a few minutes to let us show you how Luma Lab Connect is changing the game.

The Problem

You didn't become a scientist to chase down CSV files.

But here you are — spending hours pulling data off instruments, converting file types, renaming columns, formatting spreadsheets ... again.

You're wasting time you don't have, just to get your data into a usable place. And when you finally do? It's still siloed, inconsistent, and half your context is lost in translation.

It's exhausting. It's frustrating. It's grunt work.

Be honest:

How much time do you actually spend doing science each week?

Our Approach

Luma Lab Connect cuts the busywork.

It automatically pulls data from your instruments, standardizes it, and sends it where it needs to go — no manual cleanup, no lost context, no IT hoops.

You run your experiment. Luma gets the data where it needs to be.

That's it.

Quick question

You're probably thinking: "Okay but ... will this actually work with our instruments and file types?"

The answer is yes. But here's the question you should be asking:

If everyone suddenly started working from the same clean, connected data, how much more could you discover?

If that hits home, it might be time to connect Luma to your bench.

The Problem

You were hired to build models. But most days? You're just cleaning data.

You've got dozens of instruments, teams logging experiments differently, data dumped in random formats — and none of it's standardized.

So instead of delivering insights, you're debugging column headers, merging files, and trying to decode what someone meant by "Sample_0157_Final-Final_v2.csv".

And by the time the data's usable? It's already stale.

Be real:

– How much of your time goes to data prep vs. actual analysis?

– How often do you actually trust the dataset you're working with?

Our Approach

Luma Lab Connect makes lab data usable — at the source.

It connects directly to instruments, pulls the raw data, adds rich metadata, and pipes it into your downstream systems in real time. Structured, standardized, and analysis-ready.

No more hunting for files. No more guessing what "Run A3" means. No more delays waiting for someone to clean it manually.

Why This Matters

For you:

You stop being the bottleneck and start being the enabler.

For your org:

Better data means better models, faster cycles, and a real foundation for AI/ML — not just buzzwords.

Two quick questions

You're probably thinking:

"Cool, but how clean is this data actually? And can it scale with what we're doing?"

The answers: structured and standardized at the source, and yes, it scales.

But first, ask yourself:

1. Can I build repeatable pipelines off today's lab data, without writing custom scripts for every dataset?

2. If the science team ran a critical new assay tomorrow ... how long before you could use the results?

If that stung even a little — it's time to take Luma for a spin.

The Problem

Your labs want to move faster — but every new instrument becomes your problem.

They need data out of the machines and into the cloud. They want it clean, connected, and AI-ready. But you're stuck wiring up one-off scripts, supporting brittle pipelines, and fielding support tickets for tools you didn't choose.

And the kicker? The minute you say "no," they go rogue with workarounds.

Be honest:

– How many lab systems were integrated without your team's help?

– How often do you feel like the last to know (but first to blame)?

Our Approach

Luma Lab Connect takes lab data integration off your plate.

It connects to instruments across every lab — no custom coding — and automatically transforms that data into structured, standardized formats. Fully governed. Fully trackable. Built to scale.

No more shadow IT. No more ad hoc scripts. No more firefighting.

Why This Matters

For you:

Less time babysitting data flows, more time driving real digital strategy.

For your org:

The lab gets clean data. You get centralized control, audit trails, and peace of mind.

Two quick questions

You're probably wondering:

"Will this really reduce my workload, or just create another system to manage?"

The answer: yes, it really will. No gotchas.

So ask yourself:

1. How many one-off lab integrations am I currently responsible for?

2. How confident am I that the lab's data is compliant, traceable, and secure?

If those questions hit a little too close — it's time to get Luma in the loop.